RStudio Web Scraping

rvest is a new package that makes it easy to scrape (or harvest) data from HTML web pages, inspired by libraries like Beautiful Soup. It is designed to work with magrittr so that you can express complex operations as elegant pipelines composed of simple, easily understood pieces. Install it from CRAN with install.packages("rvest").

rvest in action

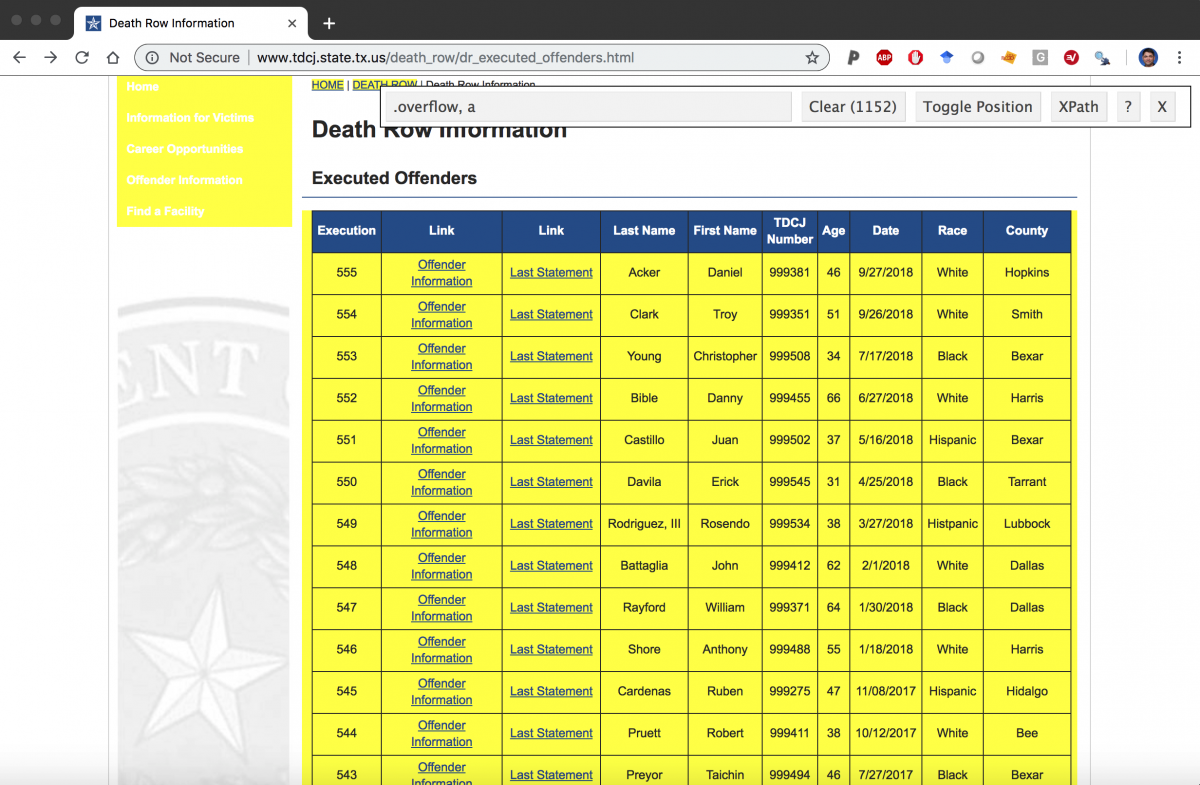

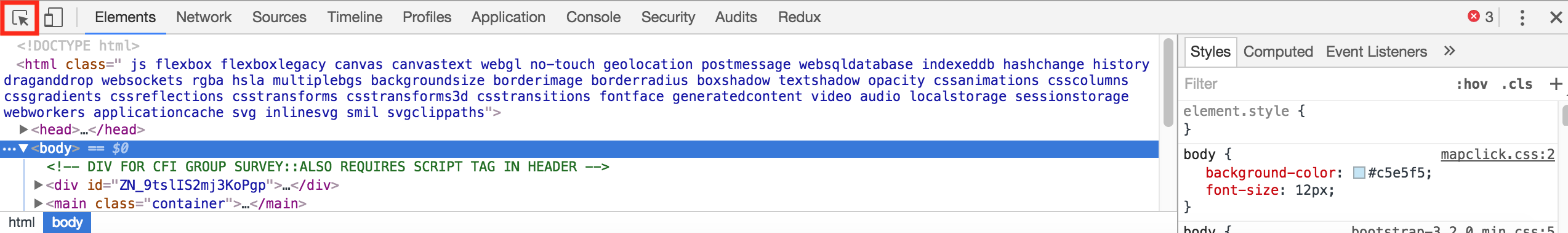

In general, web scraping in R (or in any other language) boils down to three steps:

- Get the HTML for the web page that you want to scrape.
- Decide what part of the page you want to read and find out what HTML/CSS you need to select it.
- Select the HTML and analyze it in the way you need.

There are many blogs and tutorials that teach you how to scrape, covering topics such as scraping automatically on a schedule so you can analyze frequently updated data, or a real-life rvest project that extracts, preprocesses and analyzes reviews from Trustpilot (a popular website where customers review businesses and services) with tidyverse and tidyquant.
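The three steps can be sketched with rvest on a small page. This is an illustration under stated assumptions: the HTML is made up so the sketch runs offline, and the selector ".product .price" only makes sense for that made-up page.

```r
library(rvest)

# Step 1: get the HTML. read_html() accepts a URL, a file path or, as here, a
# literal string of HTML; this made-up page keeps the sketch runnable offline.
page <- read_html('
  <html><body>
    <h1>Example products</h1>
    <ul>
      <li class="product">Widget <span class="price">9.99</span></li>
      <li class="product">Gadget <span class="price">24.50</span></li>
    </ul>
  </body></html>')

# Step 2: decide what to read. The CSS selector ".product .price" is an
# assumption about this made-up page: it matches the price inside each product.
prices <- page %>%
  html_nodes(".product .price") %>%
  html_text()

# Step 3: analyze the selection, here by converting to numbers and summing.
prices <- as.numeric(prices)
total <- sum(prices)
```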

To see rvest in action, imagine we'd like to scrape some information about The Lego Movie from IMDB. We start by downloading and parsing the file with html() (called read_html() in current versions of rvest):
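A minimal sketch of this first step, assuming current rvest. The IMDB address is not given here, so url is only a placeholder in a comment, and a tiny made-up stand-in page is parsed instead so the code runs offline.

```r
library(rvest)

# In current rvest, html() is called read_html(). With a real address it downloads
# and parses the page (url is a placeholder, not given in this post):
#   lego_movie <- read_html(url)
# Here a tiny made-up stand-in is parsed instead, so the sketch runs offline.
lego_movie <- read_html("<html><head><title>The Lego Movie</title></head><body></body></html>")

# The result is a parsed document that the other rvest functions work on.
page_title <- lego_movie %>% html_node("title") %>% html_text()
```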

The goal of web scraping is to take advantage of the pattern or structure of web pages to extract and store data in a format suitable for data analysis. The same approach works for scraping links and downloading files: for example, a scrape can start by reading the HTML of the GitHub repository rstudio/cheatsheets, which contains the PDFs of the RStudio Cheatsheets, and then pick out the links to those files.
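Scraping links and downloading files can be sketched like this. It is a hedged illustration, not the code from the original post: the page and base_url are made up (the real rstudio/cheatsheets page is not parsed), and the download step is left commented out so nothing is fetched.

```r
library(rvest)

# Made-up stand-in for a repository page that links to PDF files.
base_url <- "https://example.com/cheatsheets/"   # hypothetical base URL
page <- read_html('
  <html><body>
    <a href="data-import.pdf">Data import</a>
    <a href="README.md">Readme</a>
    <a href="/files/rmarkdown.pdf">R Markdown</a>
  </body></html>')

# Collect every href, keep only the ones that end in ".pdf",
# and turn relative links into absolute URLs.
links <- page %>% html_nodes("a") %>% html_attr("href")
pdf_links <- links[grepl("\\.pdf$", links)]
pdf_urls <- xml2::url_absolute(pdf_links, base_url)

# Downloading is then one download.file() call per URL (not run here):
# for (u in pdf_urls) download.file(u, destfile = basename(u), mode = "wb")
```

mode = "wb" matters on Windows, where PDFs would otherwise be written in text mode and corrupted.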

To extract the rating, we start with SelectorGadget to figure out which CSS selector matches the data we want: strong span. (If you haven't heard of SelectorGadget, make sure to read vignette('selectorgadget'); it's the easiest way to determine which selector extracts the data that you're interested in.) We use html_node() to find the first node that matches that selector, extract its contents with html_text(), and convert it to numeric with as.numeric():
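Here is a sketch of that pipeline. The HTML fragment and the rating value in it are invented for illustration; only the selector "strong span" comes from the text above.

```r
library(rvest)

# Made-up stand-in for the part of the page that holds the rating (the value
# is invented); the selector "strong span" is the one SelectorGadget suggested.
lego_movie <- read_html('<div><strong><span>7.5</span></strong> / 10</div>')

rating <- lego_movie %>%
  html_node("strong span") %>%  # first node that matches the selector
  html_text() %>%               # its text, "7.5"
  as.numeric()                  # converted to a number
```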

We use a similar process to extract the cast, using html_nodes() to find all nodes that match the selector:
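A sketch of the same idea with html_nodes(). The cast list and the selector ".cast .actor" are assumptions made up for this stand-in page, not the selector used on the real IMDB page.

```r
library(rvest)

# Made-up stand-in for the cast list; the selector ".cast .actor" is an
# assumption about this stand-in, not the selector used on the real page.
lego_movie <- read_html('
  <ul class="cast">
    <li class="actor">Actor One</li>
    <li class="actor">Actor Two</li>
    <li class="actor">Actor Three</li>
  </ul>')

cast <- lego_movie %>%
  html_nodes(".cast .actor") %>%  # every matching node, not just the first
  html_text()
```

With html_node() in place of html_nodes(), only "Actor One" would be returned.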

The titles and authors of recent message board postings are stored in the third table on the page. We can use html_nodes() and [[ to find it, then coerce it to a data frame with html_table():
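A minimal sketch of picking the third table, using a made-up page with three tables (the real page's layout is not reproduced here). html_table() treats the first row as a header because it consists of th cells.

```r
library(rvest)

# Made-up page with three tables; only the third holds the message board postings.
page <- read_html('
  <table><tr><td>navigation</td></tr></table>
  <table><tr><td>ads</td></tr></table>
  <table>
    <tr><th>Title</th><th>Author</th></tr>
    <tr><td>First post</td><td>alice</td></tr>
    <tr><td>Second post</td><td>bob</td></tr>
  </table>')

tables <- html_nodes(page, "table")   # all three tables
postings <- html_table(tables[[3]])   # the third one, as a data frame
```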

Other important functions

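- If you prefer, you can use XPath selectors instead of CSS: html_nodes(doc, xpath = '//table//td').
- Extract the tag names with html_tag(), text with html_text(), a single attribute with html_attr() or all attributes with html_attrs().
- Detect and repair text encoding problems with guess_encoding() and repair_encoding().
- Navigate around a website as if you're in a browser with html_session(), jump_to(), follow_link(), back(), and forward().
- Extract, modify and submit forms with html_form(), set_values() and submit_form(). (This is still a work in progress, so I'd love your feedback.)

Several of these functions have since been renamed: in rvest 1.0 and later, html_tag() is html_name(), guess_encoding() is html_encoding_guess(), the navigation functions are session(), session_jump_to(), session_follow_link(), session_back() and session_forward(), and the form functions are html_form_set() and session_submit().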
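A few of these helpers can be tried on a small made-up page, as sketched below. It uses html_name(), the current name for the tag-name function; the session and form functions are left out because they need a live website.

```r
library(rvest)

# Made-up page used to try the functions listed above.
doc <- read_html('
  <table>
    <tr><td><a href="/docs" class="nav">Docs</a></td><td>Plain cell</td></tr>
  </table>')

# XPath instead of CSS: every <td> inside a <table>.
cells <- html_nodes(doc, xpath = "//table//td")
cell_text <- html_text(cells)

link <- html_node(doc, "a")
tag <- html_name(link)             # tag name; older rvest called this html_tag()
href <- html_attr(link, "href")    # a single attribute
attrs <- html_attrs(link)          # all attributes, as a named character vector
```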

To see these functions in action, check out package demos with demo(package = 'rvest').

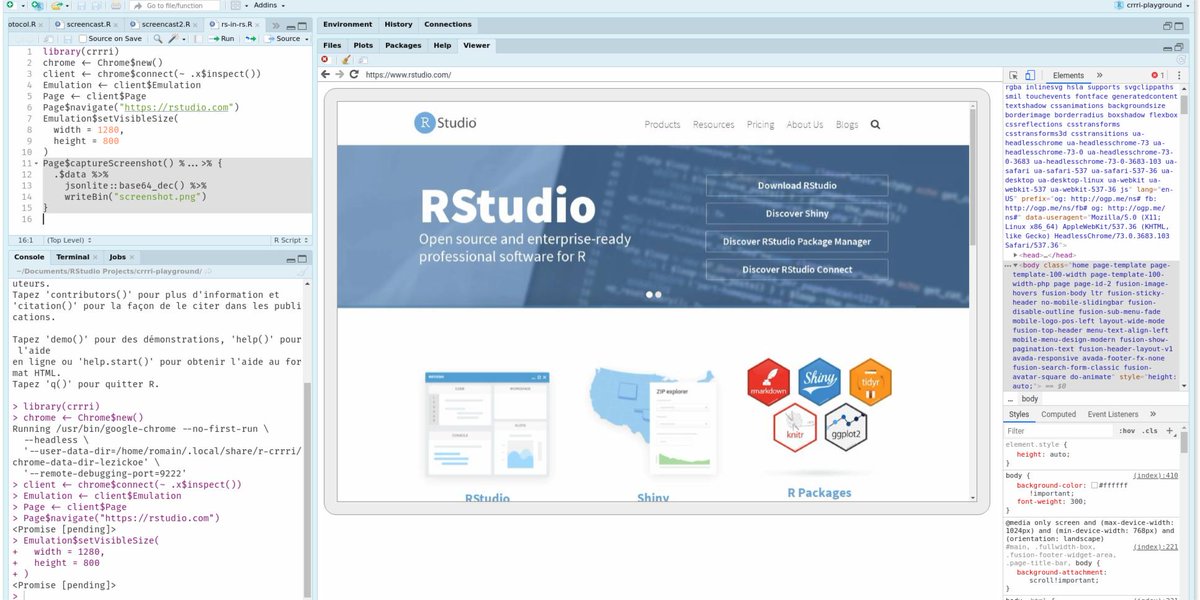

In this tutorial I will explain how the RSelenium R package can be used to easily scrape data from a dynamic web page that requires a login.

Like so many of you, I have asked myself whether it is possible to predict the results of the virtual football matches we play online using a machine learning model. In this post I am going to show you how I satisfied my curiosity with R and the RSelenium package. If you are an R programmer, chances are that you have used R's popular rvest package for web scraping but have yet to explore RSelenium. RSelenium is a good browser automation tool that can act as a bot, automating tasks in place of a human. One difference between the two packages is that rvest only downloads the HTML content of a web page, while RSelenium drives a real browser that loads everything, including content generated by the page's JavaScript. As a result, RSelenium may take longer than rvest, depending on internet speed among other things. You can run RSelenium in headless mode, so the browser window is not displayed, to improve speed, but in this tutorial we will display all content.

Some RSelenium methods and how they can be used

- $navigate(): navigates to a URL

- $findElement(): finds an element within a web page and returns it as a web element object

- $getElementText(): gets the text of an element (called on the element returned by $findElement())

- $sendKeysToElement(): sends values, such as text, into an input field (called on an element)

- $clickElement(): clicks an element (called on an element)

- $switchToFrame(): switches between frames within a web page

- $switchToWindow(): switches from one window to another

- $open(): opens a web browser client

- $close(): closes a web browser client
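The methods above chain together as sketched below. get_element_text() is a hypothetical helper, not part of RSelenium or the original post; remdr is assumed to be a remoteDriver such as the client created by rsDriver(), and the URL and CSS selector are whatever you pass in. Defining the function does not start a browser.

```r
# Hypothetical helper: go to a page, find one element by CSS selector and
# return its text. remdr is assumed to be an RSelenium remoteDriver.
get_element_text <- function(remdr, url, css) {
  remdr$navigate(url)                                          # load the page
  elem <- remdr$findElement(using = "css selector", value = css) # a web element
  text <- elem$getElementText()                                # returns a list
  unlist(text)                                                 # plain character
}
```

Usage, once a browser is running: get_element_text(remdr, url, css).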

The Selenium server that RSelenium starts requires a Java runtime, so make sure Java is installed on your system before proceeding.

We start by loading the RSelenium package with library(RSelenium).

If you don't have the RSelenium package installed, first install it with install.packages("RSelenium") before loading it.

Now we start a Selenium server and browser with the rsDriver() function and store the result in a variable named rd (short for remote driver), then create another variable, remdr, that controls the browser.
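A sketch of this setup, wrapped in hypothetical helper functions so nothing starts until you call them. The port 4545 is an arbitrary assumption, and Firefox matches the browser used in this post; rd$client is the remoteDriver and rd$server is the Selenium server process.

```r
# Hypothetical helpers around RSelenium::rsDriver(); nothing runs until called.
start_browser <- function(port = 4545L) {
  rd <- RSelenium::rsDriver(browser = "firefox", port = port, verbose = FALSE)
  remdr <- rd$client   # the remoteDriver used to control the browser
  list(rd = rd, remdr = remdr)
}

stop_browser <- function(session) {
  session$remdr$close()      # close the browser window
  session$rd$server$stop()   # stop the Selenium server process
}
```

Usage: session <- start_browser(), then remdr <- session$remdr for the scraping steps, and stop_browser(session) when you are done, so the server does not keep the port busy.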

You should see something like this:

NOTE: If you are using the rsDriver() function for the first time, your output will differ from mine, because the first run downloads the Selenium server and browser driver files. After that, your output should be similar to mine (for this lesson I used Windows 10).

By this point your Firefox browser has been launched and is ready to follow your instructions.

We now have access to all the methods contained in the remdr variable that we created earlier, so we can navigate to web pages and do many interesting things from here.

Now we will use the SelectorGadget extension in the Chrome browser to locate the elements we are interested in within any page. If you have not used SelectorGadget before, read a quick guide on how to get started, or use your browser's inspect element option: right-click on any place of interest and select "Inspect" / "Inspect Element".

We first locate the username element and store it in a variable called username.
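The login step might look like the sketch below. log_in() is a hypothetical helper, and its default selectors ("#username", "#password", "button[type='submit']") are placeholders, because the site's actual HTML is not shown in this post; find the real selectors with SelectorGadget or "Inspect". remdr is assumed to be the remoteDriver created earlier.

```r
# Hypothetical login helper; selectors and credentials are placeholders.
log_in <- function(remdr, user, password,
                   user_css = "#username", pass_css = "#password",
                   button_css = "button[type='submit']") {
  username <- remdr$findElement(using = "css selector", value = user_css)
  username$sendKeysToElement(list(user))       # type into the username field
  pass <- remdr$findElement(using = "css selector", value = pass_css)
  pass$sendKeysToElement(list(password))       # type into the password field
  button <- remdr$findElement(using = "css selector", value = button_css)
  button$clickElement()                        # submit the login form
  invisible(remdr)
}
```

sendKeysToElement() takes a list, which is why the text is wrapped in list().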


Please refer to this post for the modelling part…


Wrap-up

I use both rvest and RSelenium for web scraping, and I must say both are excellent tools that get the job done. rvest has many more users than RSelenium, so you are more likely to find solutions online for rvest-related problems than for RSelenium ones.
